Arthur C. Clarke said “any sufficiently advanced technology is indistinguishable from magic.” ChatGPT feels pretty close to magic. Or at least like the first steps on the road to magic. Heralded as revolutionizing how we access data, communicate with technology, and generate information, ChatGPT has taken the world by storm, amassing over 1 million users in its launch week. Writers, artists, programmers, and anyone else whose livelihood depends on creating digital assets are asking themselves either “how does this help me?” or, more pessimistically, “how does this endanger me?” At Onfido, as fraud prevention experts, we are asking “how will this make identity fraud easier?”

What is ChatGPT?

AI generation tools have made significant strides in the past five years, and ChatGPT from OpenAI is newly released and still in beta. The user enters a 'prompt' in plain English and the AI returns a full, grammatically correct, and often referenced paragraph. It’s conversational. It’s strikingly accurate. And it was instrumental in researching and writing this article. While it is in beta there is no barrier to entry, although it’s likely to be monetized once it is generally released.

ChatGPT can answer questions on philosophy, literature, history, and computer programming. Communities are springing up to rank and applaud the best responses, and the best approaches to writing prompts that get the most out of the system. Our core question as a fraud detection company was how fraudsters could use the system to impersonate others. So we did the natural thing and asked ChatGPT.

How could ChatGPT be used to perpetrate identity fraud?

It is important to note that ChatGPT is a language model and does not have the ability to perpetrate identity fraud on its own. However, if someone were to use ChatGPT to generate fake information or documents, they could potentially use that information to commit identity fraud. For example, they could use ChatGPT to generate a fake identity document, such as a driver’s license or passport, and then use that document to pose as someone else. This could allow them to gain access to sensitive information or financial accounts. It is important to always verify the authenticity of any documents or information that you receive, and to never share personal information with anyone unless you are certain of their identity.

On the whole, pretty impressive. It aligned with our own prediction that one day an AI-based tool will generate fraudulent documents, but interestingly it wasn’t aware that ChatGPT itself doesn’t currently support image generation. For that we’d have to turn to the other AI generation tool that has recently taken the world by storm, DALL-E (also from OpenAI). Over to ChatGPT to explain.

What is DALL-E?

DALL-E is a large language model developed by OpenAI. It is named after the artist Salvador Dali and the fictional character Wall-E. DALL-E is capable of generating images from text descriptions, using a neural network with 12 billion parameters. It was trained on a dataset of text-image pairs, allowing it to learn the relationship between text and visual data. DALL-E is designed to demonstrate the capabilities of state-of-the-art language models and their potential applications in generating diverse and creative outputs. It can be used to create a wide range of images, from photorealistic to highly stylized, based on the input text.

Thanks, ChatGPT. One of the biggest limitations of the current DALL-E system (and those like it) is how it renders text within images. Even very specific prompts, like “a t-shirt with ‘AI is good’ written on it”, return garbled results.

Another key limitation is the system’s ability to render human faces accurately. So it’s hopefully safe to say that we’re a number of years away from systems like ChatGPT or DALL-E producing sophisticated document forgeries, or deepfaked biometrics, from simple prompts. But it’s also safe to say that one day they will. After all, fraud is big business, and the market demands it.

In recent years we’ve seen an explosion of fraud-as-a-service offerings that sell custom-built tools to fraudsters. Today these services range from specific tools, such as 2FA interception bots, to full-suite packages that duplicate entire websites on lookalike URLs. I have no doubt that one day fraud-focused AI generation tools will be on the price list.

What is the impact of ChatGPT?

ChatGPT feels like the most intuitive encyclopedia ever (not) written. The most impressive thing about it isn’t the accuracy of the information it returns, but the way in which it returns it. Using ChatGPT feels more natural than looking something up on Wikipedia. Like the iPhone touchscreen, it feels like a revolution in how we’ll access information: one that makes it faster to research, draft, and create, but one that, for now, still requires human input.

When it comes to fraud, its applications are (currently) limited. For example, it makes it far quicker to create fake chat logs, website copy, and social media profiles than writing them by hand. But that’s not to say more sophisticated fraud applications won’t develop.

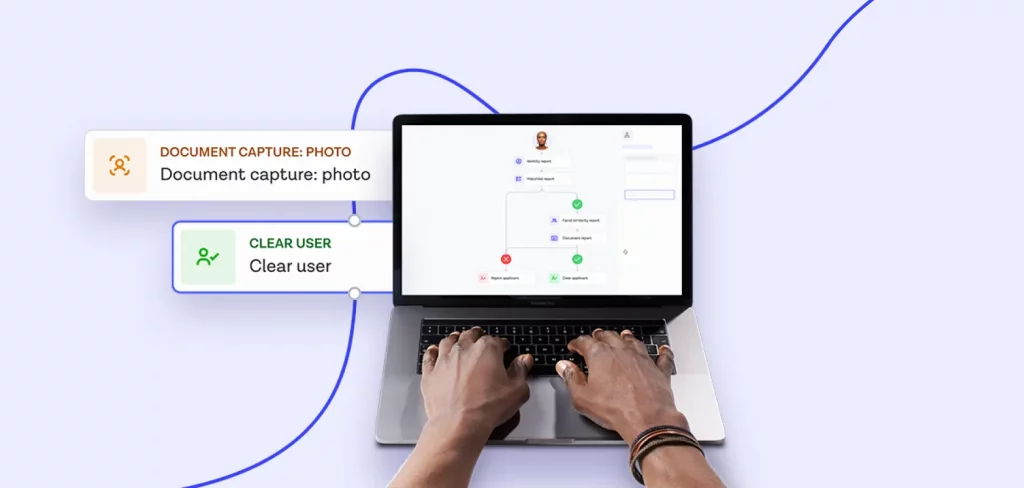

Looking ahead, it’s not all doom and gloom. At Onfido, we’ve stayed ahead of complex biometric verification spoofs, like 3D masks and display attacks, by launching Onfido Motion, our new liveness technology. We’ve also introduced passive fraud detection signals, like device intelligence, to give our customers a fuller view of their potential customers without introducing friction. We’ve done this because fraud is multi-vector, and best tackled on multiple fronts. As fraud gets smarter, so does detection, and technology is already being developed to identify writing generated by ChatGPT. So, to answer our original question: will generative AI tools bring about the fraud apocalypse? No. But they will change how we build fraud detection tools in the future.

Our Identity Fraud report analyzes the fraud landscape using our proprietary data and examines the latest fraud techniques and prevention tactics.